Predictive failure detection is a powerful approach to maintaining equipment and preventing costly breakdowns. By analyzing data from various sources, we can anticipate potential failures before they occur, enabling proactive maintenance and optimizing operational efficiency. This comprehensive guide explores the entire process, from data acquisition to maintenance optimization, and highlights key strategies for successful implementation across various industries.

This method leverages data analytics and machine learning to predict potential equipment malfunctions. It’s a shift from reactive maintenance to a proactive approach, allowing businesses to minimize downtime, reduce repair costs, and enhance overall equipment lifespan. The guide delves into the detailed process of predictive failure detection, covering data collection, model development, deployment, and analysis.

Introduction to Predictive Failure Detection

Predictive failure detection is a proactive approach to maintenance that anticipates equipment failures before they occur. Instead of relying on reactive maintenance, which addresses problems after they manifest, predictive methods leverage data analysis and advanced algorithms to forecast potential issues. This allows for preventative actions, minimizing downtime and maximizing operational efficiency.

Predictive failure detection relies on the core principle of monitoring equipment health indicators. These indicators, which can encompass vibration levels, temperature fluctuations, acoustic emissions, or other parameters, are continuously collected and analyzed. Sophisticated algorithms then identify patterns and anomalies that suggest impending failures. This enables maintenance teams to schedule repairs in advance, preventing catastrophic breakdowns and costly repairs.

Implementing predictive failure detection systems offers numerous benefits. Reduced downtime is a primary advantage, as proactive maintenance minimizes the impact of unexpected failures. This leads to increased operational efficiency and cost savings by avoiding expensive repairs and replacements. Furthermore, predictive maintenance allows for better resource allocation, enabling maintenance teams to focus on critical tasks and optimize maintenance schedules. Safety is also enhanced, as potential hazards are identified and mitigated before they become critical.

Predictive failure detection is widely used across various industries. Manufacturing plants, particularly those involving heavy machinery, often employ such systems to monitor the health of their equipment. The energy sector, including power plants and pipelines, benefits significantly from predicting equipment failures to ensure reliable energy supply. Transportation industries, like airlines and shipping companies, also utilize predictive maintenance to maintain the integrity and performance of their fleets. Even in healthcare, predictive maintenance is employed in monitoring medical equipment to ensure reliable operation and patient safety.

Key Stages of a Predictive Failure Detection System

A robust predictive failure detection system follows a structured sequence of stages. These stages, from data acquisition to corrective action, are crucial for the success of the system.

| Stage | Description |
|---|---|
| Data Acquisition | This stage involves collecting relevant data points from various sources, such as sensors, logs, and historical records. The data should be comprehensive, encompassing various parameters and indicators that influence equipment performance. |
| Data Preprocessing | Raw data is often messy and inconsistent. This stage involves cleaning, transforming, and preparing the data for analysis. This includes handling missing values, outliers, and ensuring data quality for accurate insights. |
| Feature Engineering | Extracting relevant features from the preprocessed data is critical for effective analysis. This may involve creating new variables from existing data or selecting the most pertinent indicators for modeling. Identifying patterns and correlations that indicate potential failures is essential. |
| Model Training | A suitable machine learning model is selected and trained using the engineered features. Models like regression, neural networks, or time series analysis can be employed, depending on the specific data and characteristics of the equipment. The model learns to identify the patterns associated with normal operation and deviations that suggest impending failures. |
| Model Validation | The trained model is tested against a separate dataset to assess its accuracy and reliability. This step ensures that the model generalizes well to unseen data and accurately predicts failures. Testing the model against historical data is critical for ensuring the model’s robustness. |
| Prediction and Alerting | The validated model is deployed to predict potential failures. Real-time monitoring and analysis enable the system to generate alerts when critical thresholds are crossed, providing advance warning of impending issues. |
| Corrective Action | Based on the predictions and alerts, maintenance teams can schedule preventive maintenance or repairs. This proactive approach minimizes downtime and prevents catastrophic failures. |

Data Acquisition and Preprocessing

Accurate predictive failure detection hinges on the quality and comprehensiveness of the data used to train models. Data acquisition and preprocessing are crucial steps in this process, ensuring the models are trained on reliable information that reflects the actual operating conditions of the equipment. Effective methods for gathering and preparing data directly impact the accuracy and reliability of the predictions generated.

Methods for Gathering Data

Various techniques are employed to collect data relevant to equipment health. These methods range from simple sensor readings to complex data logging systems. Choosing the appropriate method depends on factors like the type of equipment, the nature of the failure modes, and the available resources.

- Sensors: Sensors are fundamental for monitoring various parameters like vibration, temperature, pressure, and current. For example, vibration sensors can detect subtle changes in equipment vibration patterns that could indicate impending failures in rotating machinery. Acoustic sensors can be used to monitor unusual noises, providing an early warning of potential problems. Using a combination of sensors provides a holistic view of equipment health.

- Data Logging Systems: Specialized data loggers capture and store large volumes of data from various sensors. These systems provide a comprehensive record of equipment performance over time, enabling detailed analysis of trends and patterns. Modern data logging systems are often equipped with sophisticated data analysis tools that facilitate the identification of anomalies and potential failures.

- Machine Learning-based Monitoring: Machine learning algorithms can be employed to automatically monitor equipment performance by identifying anomalies or deviations from expected patterns. These algorithms are trained on historical data to develop a baseline for normal operation, allowing for real-time detection of unusual behavior that could indicate impending failures. This method is particularly useful for complex equipment with multiple interconnected components.

Steps in Data Preprocessing

Raw data collected from sensors and data loggers often needs significant preparation before it can be used for predictive modeling. This preprocessing step typically involves cleaning, transforming, and reducing the data to make it suitable for analysis.

- Data Cleaning: This involves handling missing values, outliers, and inconsistencies in the data. Missing values can be imputed using various techniques, while outliers can be identified and either removed or treated appropriately. Inconsistent data entries are corrected to ensure data integrity.

- Data Transformation: Data transformation aims to improve the quality and suitability of the data for analysis. This may include scaling or normalization of numerical features, converting categorical variables into numerical representations, or applying mathematical functions to improve data distribution. Appropriate transformations are essential to avoid skewing model results.

- Feature Engineering: This involves creating new features from existing ones or extracting meaningful information from the data. New features can provide insights into complex relationships or patterns that may not be apparent in the raw data. This can include calculations based on sensor readings or derived quantities, enhancing the model’s ability to capture crucial characteristics.

- Data Reduction: This step reduces the dimensionality of the data, making it more manageable and computationally efficient for analysis. Techniques like Principal Component Analysis (PCA) or other dimensionality reduction methods can be employed to create new features that capture the most important aspects of the data while reducing noise and irrelevant information.
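The cleaning and transformation steps above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not a complete pipeline: the readings are made up, median imputation and Tukey's IQR fences are just one reasonable choice among many, and the function names are hypothetical. Feature engineering and dimensionality reduction are left out.

```python
from statistics import median, quantiles


def impute_missing(values):
    """Replace missing readings (None) with the median of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def clip_outliers(values, k=1.5):
    """Clip readings to Tukey's fences: [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def min_max_scale(values):
    """Rescale readings linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical temperature readings with two gaps and one sensor spike.
raw_temperatures = [55.0, 56.5, None, 57.0, 140.0, 58.0, 56.0, None, 57.5]

cleaned = clip_outliers(impute_missing(raw_temperatures))
scaled = min_max_scale(cleaned)
print(cleaned)
print(scaled)
```

Clipping keeps the row in the dataset while limiting the influence of the spike. Whether an outlier should be clipped, removed, or kept as a possible failure signal depends on the equipment and is a judgment call.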

Challenges in Data Acquisition and Preprocessing

Several challenges can arise during data acquisition and preprocessing.

- Data Quality Issues: Inaccurate or inconsistent data can lead to inaccurate predictions. Data quality issues stem from various sources, including sensor malfunctions, faulty data logging systems, and human errors during data entry. Ensuring data accuracy and reliability is paramount.

- Data Volume and Velocity: Modern equipment often generates massive amounts of data at high speeds. Managing and processing this data can be challenging, requiring sophisticated data storage and processing infrastructure. Real-time analysis of large datasets is essential for timely predictions.

- Data Security: Protecting sensitive equipment data is critical. Data breaches or unauthorized access can compromise the integrity of the system. Robust security measures are needed to safeguard the data.

- Sensor Calibration and Maintenance: Sensors require regular calibration and maintenance to ensure accuracy and reliability. Failure to maintain sensors can lead to inaccurate data, impacting the reliability of predictions.

Importance of Data Quality

Data quality plays a critical role in the accuracy of predictive failure detection models. Inaccurate data can lead to flawed predictions, resulting in either unnecessary maintenance or, worse, equipment failure. Ensuring high data quality throughout the acquisition and preprocessing stages is essential for successful implementation.

Comparison of Data Acquisition Techniques

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Sensors | Directly measures physical parameters | Real-time data, high precision | Sensor limitations, calibration needs |
| Data Logging Systems | Records data over time | Comprehensive data history, trend analysis | Storage capacity, data volume management |
| Machine Learning-based Monitoring | Predictive analytics using algorithms | Early failure detection, real-time monitoring | Algorithm complexity, model maintenance |

Feature Engineering and Selection

Feature engineering and selection are crucial steps in predictive failure detection. They involve transforming raw data into a format suitable for machine learning algorithms, focusing on extracting the most relevant information to accurately predict failures. This process significantly impacts the performance of the predictive models, often leading to improved accuracy and reduced false positives.

The core idea is to move beyond simply observing raw data points to create new, more informative variables that capture underlying patterns and relationships within the data. This process of feature engineering helps to create features that better represent the system’s behavior and the likelihood of failure. Feature selection then identifies the subset of these engineered features that are most important for the prediction task.

Feature Extraction Techniques

Feature extraction aims to derive new, meaningful features from the existing raw data. This often involves applying mathematical or statistical transformations.

- Signal Processing Techniques: These techniques are frequently used in time-series data. For instance, extracting features like mean, standard deviation, peak values, and frequency components from vibration signals can reveal critical information about machine health.

- Statistical Measures: Calculations like correlations, averages, and percentiles can reveal relationships between variables and highlight critical trends.

- Domain-Specific Knowledge: Expertise in the specific equipment or process can lead to the creation of features that capture relevant information. For example, in a jet engine, the ratio of fuel consumption to thrust can indicate potential problems.
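As a rough sketch of the signal-processing features mentioned above, the following computes a few time-domain statistics and the dominant frequency of one vibration window. The signal is synthetic (a pure 50 Hz sine sampled at 1 kHz), the function names are hypothetical, and the naive discrete Fourier transform is only practical for short windows. A real system would normally use an FFT library.

```python
import math
from statistics import mean, pstdev


def vibration_features(signal):
    """Time-domain summary statistics for one window of vibration samples."""
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    peak = max(abs(x) for x in signal)
    return {
        "mean": mean(signal),
        "std": pstdev(signal),
        "peak": peak,
        "rms": rms,
        # Crest factor: how "spiky" the signal is relative to its energy.
        "crest_factor": peak / rms if rms else 0.0,
    }


def dominant_frequency(signal, sample_rate_hz):
    """Frequency (Hz) of the strongest non-DC bin of a naive O(n^2) DFT."""
    n = len(signal)
    best_k, best_power = 0, 0.0
    for k in range(1, n // 2):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    return best_k * sample_rate_hz / n


# Synthetic window: 200 samples of a 50 Hz sine sampled at 1 kHz.
sample_rate_hz = 1000
signal = [math.sin(2 * math.pi * 50 * i / sample_rate_hz) for i in range(200)]

features = vibration_features(signal)
features["dominant_hz"] = dominant_frequency(signal, sample_rate_hz)
print(features)
```

Each window of raw samples becomes one row of features, which is the form most of the models discussed later expect.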

Feature Selection Methods

Feature selection aims to identify the most relevant features for predictive models. This process often involves eliminating less important features.

- Filter Methods: These methods assess the relevance of features independently of the learning algorithm. Common approaches include calculating the correlation between features and the target variable (e.g., failure indicator) or using statistical tests like chi-square. The selection process is straightforward and computationally inexpensive.

- Wrapper Methods: These methods evaluate feature subsets by using the chosen learning algorithm. They iteratively add or remove features, evaluating the performance of the model at each step. This method can lead to better results but is often computationally expensive, especially with a large number of features.

- Embedded Methods: These methods integrate feature selection within the learning algorithm itself. For example, some machine learning algorithms like LASSO regression automatically select features during the training process. This approach can be efficient and potentially lead to good results.
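A filter method is the simplest of the three to illustrate. The sketch below ranks features by the absolute Pearson correlation with the failure indicator and keeps those above a cutoff. The data, the feature names, and the 0.5 cutoff are all invented for illustration. Correlation only captures linear relationships, so a low score does not prove a feature is useless.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (sx * sy)


def rank_features(features, target):
    """Sort feature names by |correlation with target|, strongest first."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical readings for six machine runs.
features = {
    "temperature_c": [55, 60, 70, 80, 58, 75],
    "vibration_mm_s": [1.1, 1.3, 2.9, 3.4, 1.2, 3.0],
    "ambient_humidity": [40, 55, 45, 50, 52, 43],
}
failure = [0, 0, 1, 1, 0, 1]

ranking = rank_features(features, failure)
selected = [name for name, score in ranking if score >= 0.5]  # arbitrary cutoff
print(ranking)
print(selected)
```

Because each feature is scored on its own, this approach is cheap but blind to redundancy: two highly correlated sensors would both be kept.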

Impact of Feature Selection on Model Performance

Careful selection of features directly affects the performance of predictive models. A well-chosen set of features can lead to more accurate predictions, improved model efficiency, and reduced computational costs. Conversely, irrelevant or redundant features can negatively impact model accuracy, potentially leading to incorrect predictions and a false sense of confidence in the model.

Example: Feature Engineering with a Simple Dataset

Consider a dataset tracking the operating hours and temperature of a machine.

| Operating Hours (hours) | Temperature (°C) | Failure Indicator (1=Failure, 0=No Failure) |
|---|---|---|
| 100 | 55 | 0 |
| 200 | 60 | 0 |
| 300 | 70 | 1 |
| 400 | 80 | 1 |

From this basic data, we can engineer a new feature: Temperature Deviation, calculated as the difference between the current temperature and the average temperature over the operating hours. This new feature can provide more context to the model, potentially improving its ability to detect failure patterns.
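Worked out on the four rows above, the calculation looks like this. The sketch assumes that "average temperature" means the plain mean over all four rows. In practice the baseline would be computed from training data only, or as a running average, so that no future readings leak into the feature.

```python
# The four rows from the example table.
rows = [
    {"operating_hours": 100, "temperature_c": 55, "failure": 0},
    {"operating_hours": 200, "temperature_c": 60, "failure": 0},
    {"operating_hours": 300, "temperature_c": 70, "failure": 1},
    {"operating_hours": 400, "temperature_c": 80, "failure": 1},
]

# Baseline: mean temperature over all rows, (55 + 60 + 70 + 80) / 4 = 66.25.
mean_temp = sum(r["temperature_c"] for r in rows) / len(rows)

# Engineered feature: how far each reading sits from the baseline.
for r in rows:
    r["temperature_deviation"] = r["temperature_c"] - mean_temp

for r in rows:
    print(r["operating_hours"], r["temperature_deviation"], r["failure"])
# deviations: -11.25, -6.25, 3.75, 13.75
```

In this tiny dataset both failures have a positive deviation and both normal rows have a negative one, which is the kind of separation the engineered feature is meant to expose.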

Model Development and Training

Developing predictive models for failure detection requires careful selection of machine learning algorithms and rigorous training and validation procedures. This process hinges on the quality of the acquired data and the engineered features, ensuring that the model accurately reflects the underlying patterns and trends. A robust model is critical for identifying potential failures in advance, enabling proactive maintenance and minimizing downtime.

Machine Learning Algorithms for Predictive Failure Detection

Various machine learning algorithms demonstrate suitability for predictive failure detection tasks. Choosing the appropriate algorithm depends on factors such as the nature of the data, the complexity of the failure patterns, and the desired level of accuracy. Popular choices include supervised learning techniques like Support Vector Machines (SVMs), Random Forests, and Gradient Boosting Machines. Unsupervised learning approaches, such as clustering algorithms, can also be valuable for identifying anomalies and potential failure precursors.

Model Training Steps

The training process involves feeding the selected data to the chosen algorithm. This data, which includes historical operational data and corresponding failure information, is divided into training, validation, and testing sets. The training set is used to train the model, while the validation set is used to tune the model’s parameters and prevent overfitting. The testing set provides an unbiased evaluation of the model’s performance on unseen data.

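Crucially, the splitting strategy must avoid introducing bias. With time-ordered sensor data, a random split can leak information from the future into the training set, so splitting chronologically (or by machine) is usually the safer choice.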
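One common way to split sensor data without leaking future information is to divide it by time. The following is a minimal sketch: the `timestamp` field, the 70/15/15 proportions, and the function name are assumptions for illustration, not a prescribed scheme.

```python
def chronological_split(records, train_frac=0.7, val_frac=0.15):
    """Split records into train/validation/test sets by time order.

    Older records go to training and the newest to testing, so the model
    is always evaluated on data recorded after the data it learned from.
    """
    ordered = sorted(records, key=lambda r: r["timestamp"])
    n = len(ordered)
    train_end = round(n * train_frac)
    val_end = round(n * (train_frac + val_frac))
    return ordered[:train_end], ordered[train_end:val_end], ordered[val_end:]


# Hypothetical records, deliberately supplied out of order.
records = [{"timestamp": t, "temperature_c": 50 + t % 7} for t in reversed(range(20))]

train, val, test = chronological_split(records)
print(len(train), len(val), len(test))
```

When several machines are monitored, splitting by machine as well as by time helps check that the model generalizes to equipment it has never seen.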

Model Validation and Testing

Model validation and testing are essential steps in the development process. Validation ensures that the model generalizes well to unseen data, and testing provides an independent assessment of the model’s predictive capability. This involves evaluating metrics such as accuracy, precision, recall, and F1-score to quantify the model’s performance. These metrics help determine the model’s ability to correctly classify instances as either faulty or operational.

For example, a high recall value suggests that the model is effective at identifying actual failures.

Model Validation Techniques

Various validation techniques exist to ensure the reliability of the predictive model. Cross-validation is a common technique where the data is divided into multiple subsets, and the model is trained and tested on different subsets in each iteration. This approach provides a more robust estimate of the model’s performance compared to a single train-test split. Another method is to use hold-out sets, which involves setting aside a portion of the data for final testing, ensuring an unbiased assessment of the model’s predictive ability on completely unseen data.

Moreover, evaluating the model’s performance on different subsets of the data can help uncover potential biases in the data or model.
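Cross-validation can be sketched without any library. In the example below the data is invented, and the "model" is a deliberately trivial temperature threshold standing in for a real classifier; both function names are hypothetical. Plain k-fold also ignores time order, so for time-series data a forward-chaining variant is usually preferred.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = [i for i in range(n_samples) if i < start or i >= stop]
        yield train_idx, test_idx
        start = stop


def fit_threshold(temps, labels):
    """Toy 'model': midpoint between mean normal and mean failure temperature."""
    normal = [t for t, y in zip(temps, labels) if y == 0]
    failed = [t for t, y in zip(temps, labels) if y == 1]
    return (sum(normal) / len(normal) + sum(failed) / len(failed)) / 2


# Hypothetical readings with alternating normal (0) and failure (1) labels.
temps = [55, 72, 58, 75, 60, 78, 57, 80, 59, 74]
labels = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]

scores = []
for train_idx, test_idx in k_fold_indices(len(temps), k=5):
    threshold = fit_threshold([temps[i] for i in train_idx],
                              [labels[i] for i in train_idx])
    correct = sum((temps[i] > threshold) == bool(labels[i]) for i in test_idx)
    scores.append(correct / len(test_idx))

print(scores, sum(scores) / len(scores))
```

The spread of the per-fold scores is as informative as their mean: a large spread suggests the model's performance depends heavily on which data it happened to see.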

Machine Learning Algorithms Comparison

| Algorithm | Strengths | Weaknesses |
|---|---|---|
| Support Vector Machines (SVM) | Effective in high-dimensional spaces, robust to outliers. | Can be computationally expensive for large datasets, may require careful parameter tuning. |
| Random Forests | Handles high-dimensional data well, relatively robust to overfitting. | Can be computationally expensive for extremely large datasets. |
| Gradient Boosting Machines (GBM) | High accuracy, capable of handling complex relationships in the data. | Can be prone to overfitting if not carefully tuned. |
| Clustering Algorithms (e.g., K-means) | Useful for identifying anomalies and potential failure precursors in unsupervised settings. | Performance depends heavily on the choice of clustering parameters. |

Model Deployment and Monitoring

Deploying a trained predictive failure detection model is a crucial step in achieving practical value. This involves integrating the model into a real-world system, enabling continuous monitoring of equipment health and proactive intervention. Effective monitoring allows for adaptation to changing operating conditions, ensuring the model’s continued accuracy and reliability.

This phase encompasses several critical tasks, from seamless integration to vigilant performance monitoring and proactive adaptation to evolving conditions. This ensures the model remains effective in predicting future failures in diverse operating scenarios.

Model Deployment Process

Integrating the trained model into the operational system is critical for real-time predictions. Deployment strategies must consider factors such as scalability, latency, and data volume. Different deployment architectures, such as cloud-based solutions or edge computing, can be chosen depending on the specific requirements of the application. Choosing the correct deployment approach is crucial for optimal performance.

Model Performance Monitoring Methods

Continuous monitoring of the model’s performance is essential to maintain accuracy and reliability. Key metrics for monitoring include accuracy, precision, recall, and F1-score, along with evaluation of the model’s ability to detect anomalies. Tracking these metrics allows for identification of potential performance degradation and enables timely adjustments to maintain predictive accuracy. Real-time monitoring systems, regularly updated, ensure that the model’s performance is closely tracked.

Model Adaptation to Changing Operating Conditions

Predictive models often need to adapt to evolving operating conditions to maintain accuracy. Methods include retraining the model periodically with new data to reflect these changes. This ensures that the model’s predictions remain relevant in different operating environments and avoids outdated information that could lead to inaccurate predictions. Regular retraining can mitigate the impact of variations in operating conditions.

Examples include a manufacturing plant whose machines run at varying speeds and a wind turbine exposed to changing wind patterns.
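Periodic retraining can be complemented by a performance trigger. The sketch below tracks recall over the most recent confirmed failures and flags the model for retraining when recall drops below a floor. The class name, the window size, and the 0.8 floor are hypothetical choices, and it assumes that the actual outcome of each prediction eventually becomes known.

```python
from collections import deque


class RecallMonitor:
    """Track recall over the most recent confirmed failures."""

    def __init__(self, window=50, min_recall=0.8):
        # One entry per actual failure: True if the model had predicted it.
        self.outcomes = deque(maxlen=window)
        self.min_recall = min_recall

    def record(self, predicted_failure, actual_failure):
        """Log one prediction once the real outcome is known."""
        if actual_failure:
            self.outcomes.append(bool(predicted_failure))

    def recall(self):
        """Share of recent failures that were predicted, or None if no data."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        current = self.recall()
        return current is not None and current < self.min_recall


monitor = RecallMonitor(window=5, min_recall=0.8)

# Five failures in a row are caught: recall stays at 1.0.
for _ in range(5):
    monitor.record(predicted_failure=True, actual_failure=True)
before = monitor.needs_retraining()

# Operating conditions change and two failures are missed: recall drops to 0.6.
for _ in range(2):
    monitor.record(predicted_failure=False, actual_failure=True)
after = monitor.needs_retraining()

print(before, after, monitor.recall())
```

Recall is monitored here because a missed failure is usually the most expensive error, but the same pattern works for precision or any other metric.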

Model Deployment Architectures

Different architectures support various deployment scenarios. A cloud-based deployment allows for scalability and access from multiple locations. An edge deployment, on the other hand, enables real-time processing of data without relying on a central server, suitable for systems with limited network connectivity. These solutions cater to diverse needs, ensuring seamless integration with the target systems.

- Cloud-based deployment: Leverages cloud computing resources for model hosting and data processing. This architecture allows for scalability and easy access to the model from multiple locations, making it suitable for large-scale deployments. For instance, a company managing numerous manufacturing facilities can leverage a cloud-based solution for centralized model management and real-time monitoring.

- Edge deployment: Places the model directly on the equipment or close to the data source. This minimizes latency and avoids dependence on network communication, ideal for situations with limited or unreliable network connectivity. Examples include a remote oil rig or a system with many distributed sensors.

Model Deployment Lifecycle Flowchart

The following flowchart illustrates the model deployment lifecycle.

[Flowchart: model training, deployment, monitoring, adaptation, and retraining, with feedback loops driven by performance monitoring results.]

Failure Prediction and Analysis

Predictive failure detection relies heavily on accurate failure prediction models to anticipate equipment malfunctions. This phase involves leveraging the trained models to forecast potential failures, analyze the associated risks, and interpret the results to inform maintenance strategies. Understanding potential failure patterns is crucial for proactive maintenance and minimizing downtime.

Analyzing predicted failure risks is vital for prioritizing maintenance actions. This involves assessing the probability and severity of potential failures, enabling informed decisions about maintenance schedules and resource allocation. A well-structured approach to failure prediction provides a valuable tool for optimizing operational efficiency and reducing the impact of unexpected equipment breakdowns.

Methods for Analyzing Predicted Failure Risks

Predicting potential failures involves assessing the probability and severity of equipment malfunctions. Quantitative risk analysis methods, such as calculating failure probabilities and impact assessments, are often employed. These methods are instrumental in identifying the critical components or systems most likely to fail and thus in need of prioritized maintenance.
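A simple quantitative version of this idea multiplies each component's predicted failure probability by the estimated cost of that failure and ranks components by the result. The component names, probabilities, and costs below are invented for illustration. Real risk assessments often add further factors, such as safety consequences or detection difficulty.

```python
# Hypothetical model outputs and cost estimates for three components.
components = [
    {"name": "pump_bearing", "failure_probability": 0.30, "impact_cost": 20_000},
    {"name": "conveyor_motor", "failure_probability": 0.05, "impact_cost": 150_000},
    {"name": "cooling_fan", "failure_probability": 0.60, "impact_cost": 2_000},
]


def risk_score(component):
    """Expected loss: probability of failure times the cost if it happens."""
    return component["failure_probability"] * component["impact_cost"]


# Highest expected loss first.
ranked = sorted(components, key=risk_score, reverse=True)

for c in ranked:
    print(f'{c["name"]}: expected loss about {risk_score(c):,.0f}')
```

Note that the cooling fan is the most likely component to fail yet ranks last, because its failure is cheap. Ranking by probability alone would have put it first.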

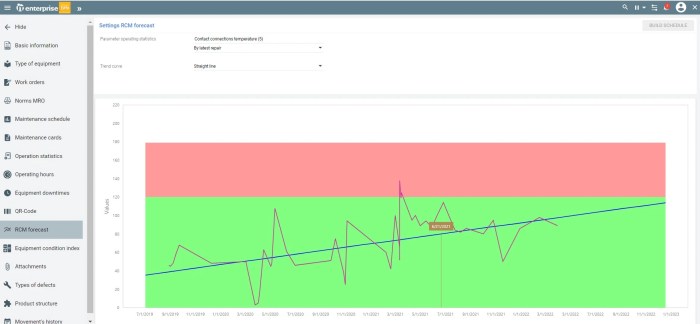

Interpreting Failure Prediction Results

Interpreting failure prediction results requires a clear understanding of the model’s output and the context of the equipment’s operational environment. Visualizations, such as graphs and charts, are commonly used to display predicted failure probabilities over time. These visualizations help identify trends and potential failure patterns. An example could be a graph showing the predicted probability of a pump failing over the next six months, highlighting potential maintenance needs.

Analyzing the model’s output alongside historical data and operational conditions further enhances the interpretation process.

Significance of Identifying Potential Failure Patterns

Identifying potential failure patterns through predictive modeling allows for proactive maintenance strategies. Anticipating failures enables preventive maintenance actions, potentially avoiding costly breakdowns and downtime. Recognizing recurring patterns in equipment performance data can provide insights into the root causes of failures and enable improvements in design or operational procedures. For instance, if a particular type of bearing consistently exhibits similar failure patterns under specific load conditions, corrective measures can be implemented to mitigate these issues.

Failure Prediction Metrics

Understanding the performance of predictive models requires appropriate metrics. These metrics quantify the model’s accuracy and effectiveness in predicting failures.

| Metric | Description | Interpretation |
|---|---|---|
| Accuracy | Proportion of all predictions (failure and non-failure) that are correct. | Easy to read, but can be misleading when failures are rare: a model that never predicts a failure can still score high. |
| Precision | Proportion of predicted failures that are actual failures. | High precision suggests the model is less likely to produce false positives. |
| Recall | Proportion of actual failures that are correctly predicted. | High recall indicates the model misses few actual failures. |
| F1-score | Harmonic mean of precision and recall. | A balanced measure of precision and recall. |
| AUC (Area Under the ROC Curve) | Measures the model’s ability to distinguish between failure and non-failure cases. | A higher AUC indicates better performance in distinguishing between classes. |
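The first four metrics in the table can be computed directly from the counts of true and false positives and negatives. The sketch below uses made-up labels in which failures are the minority class, and the function name is hypothetical. AUC is omitted because it needs predicted probabilities rather than hard labels.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = failure)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical ground truth: 3 failures among 10 observations.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]

model_pred = [0, 0, 0, 0, 0, 0, 1, 1, 1, 0]   # one false alarm, one miss
never_alarm = [0] * 10                         # never predicts a failure

model_metrics = classification_metrics(y_true, model_pred)
baseline_metrics = classification_metrics(y_true, never_alarm)
print(model_metrics)
print(baseline_metrics)
```

The baseline that never raises an alarm still reaches 0.7 accuracy while catching no failures at all, which is why recall and precision matter more than accuracy when failures are rare.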

Maintenance Optimization

Predictive failure detection significantly impacts maintenance strategies by shifting from reactive to proactive approaches. This proactive approach, informed by predicted equipment failures, enables optimized maintenance schedules, leading to substantial economic benefits and extended equipment lifespans. This section details the implications of predictive maintenance on optimizing maintenance strategies.

Impact on Maintenance Strategies

Predictive failure detection provides valuable insights into the health of equipment, enabling maintenance teams to anticipate potential failures. This foresight allows for the scheduling of maintenance activities in advance, minimizing downtime and maximizing operational efficiency. Rather than waiting for a component to break down, maintenance can be performed when it is most convenient and cost-effective, based on the predicted failure time.

Optimized Maintenance Schedules

Predictive maintenance facilitates the creation of optimized maintenance schedules tailored to the specific needs of each piece of equipment. Instead of adhering to rigid, pre-determined intervals, maintenance tasks are scheduled based on the predicted remaining useful life (RUL) of components. This approach reduces unnecessary maintenance interventions while ensuring that critical repairs are performed before failures occur. For instance, a pump with a predicted failure in three months can have its maintenance scheduled shortly before that point, rather than at a fixed interval, minimizing potential operational disruption.
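Turning an RUL estimate into a date is mostly arithmetic. The following sketch schedules the work a fixed safety margin ahead of the predicted failure. The function name, the 14-day margin, and the dates are illustrative assumptions, and a real scheduler would also account for prediction uncertainty, spare parts, and planned production stops.

```python
from datetime import date, timedelta


def schedule_maintenance(today, rul_days, safety_margin_days=14):
    """Return the date to service a component, ahead of its predicted failure.

    rul_days is the model's remaining-useful-life estimate in days. If the
    margin is larger than the RUL, the work is due immediately.
    """
    lead_days = max(rul_days - safety_margin_days, 0)
    return today + timedelta(days=lead_days)


today = date(2024, 1, 1)

# Pump predicted to fail in roughly three months (90 days).
pump_due = schedule_maintenance(today, rul_days=90)

# Component already inside the safety margin: service it now.
urgent_due = schedule_maintenance(today, rul_days=5)

print(pump_due, urgent_due)
```

A larger margin trades some remaining component life for a lower chance that the failure arrives before the repair.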

Economic Benefits of Proactive Maintenance

Proactive maintenance, driven by predictive failure detection, yields substantial economic benefits. Reduced downtime translates to increased production output and lower repair costs. By preventing catastrophic failures, proactive maintenance avoids expensive repairs and replacements. For example, a predictive maintenance system could identify a high probability of bearing failure in a critical machine. This allows the company to schedule the bearing replacement during a planned downtime, avoiding a complete machine shutdown due to an unexpected failure.

Preventive replacement also reduces the risk of unforeseen expenses.

Impact on Equipment Lifespan

Predictive maintenance contributes to increased equipment lifespan by proactively addressing potential issues. By preventing premature failures, equipment can operate reliably for longer periods. For example, regular monitoring of a turbine’s vibration levels through predictive maintenance could detect anomalies and schedule necessary repairs well in advance, potentially extending the turbine’s operational life by several years. This results in lower overall maintenance costs over the equipment’s lifecycle.

Comparison of Reactive and Proactive Maintenance

| Characteristic | Reactive Maintenance | Proactive Maintenance (Predictive) |
|---|---|---|
| Trigger | Equipment failure | Predicted equipment failure |
| Maintenance Schedule | Unscheduled, often unplanned | Scheduled, based on predictions |
| Downtime | Often significant and disruptive | Minimized, scheduled during planned downtime |
| Cost | Higher due to emergency repairs and lost production | Lower due to reduced downtime and planned maintenance |
| Equipment Lifespan | Potentially shorter due to unanticipated failures | Potentially longer due to proactive intervention |
| Overall Efficiency | Lower due to frequent unexpected failures | Higher due to consistent operation and reduced downtime |

Case Studies and Examples

Predictive failure detection is no longer a futuristic concept; it’s a practical reality impacting various industries. Real-world case studies demonstrate its tangible benefits and highlight the diverse applications of this technology. This section provides examples of successful implementations, showcasing how predictive maintenance strategies are improving operational efficiency and reducing downtime across different sectors.

Successful Implementations in Manufacturing

Predictive maintenance is proving particularly valuable in manufacturing settings. Consider a large automotive parts manufacturer. By implementing a predictive maintenance system, they monitored the vibrations and temperatures of their robotic assembly lines. The system predicted potential failures in the robotic arms, allowing for proactive maintenance before complete breakdowns. This proactive approach reduced downtime by 25%, saved significant repair costs, and improved overall production efficiency.

Another example is a machine shop that employed vibration analysis to predict the wear and tear on its CNC milling machines. The shop implemented preventative maintenance schedules based on the predictions, reducing unexpected machine breakdowns and ensuring consistent production output.

Applications Across Industries

Predictive maintenance isn’t limited to manufacturing. In the aviation industry, airlines are using predictive maintenance to monitor aircraft engines. By analyzing sensor data, the system can anticipate potential issues and schedule maintenance before a catastrophic failure. This approach minimizes the risk of mid-flight emergencies and ensures flight safety.

In the energy sector, power plants are utilizing predictive maintenance to detect equipment degradation and optimize maintenance schedules. Early detection of potential issues prevents costly repairs and ensures the consistent and reliable operation of power grids. Similarly, in the oil and gas industry, predictive maintenance can monitor pipelines and equipment to detect potential leaks or failures. This enables proactive measures to mitigate risks and prevent environmental damage.

Challenges and Solutions in Deployments

Implementing predictive maintenance systems isn’t without challenges. One key challenge is the volume and complexity of the data that needs to be analyzed. Effective data acquisition, preprocessing, and feature engineering are critical for successful deployment, and the machine learning model must be capable enough to extract meaningful insights from the resulting datasets. Another challenge is integrating the predictive maintenance system with existing operational systems, which calls for a well-defined data integration strategy to ensure seamless data flow and consistent operation.

Benefits and Drawbacks of Predictive Maintenance

Predictive maintenance offers numerous advantages, including reduced downtime, lower maintenance costs, improved safety, and enhanced operational efficiency. However, there are also potential drawbacks to consider. The initial investment in hardware, software, and training can be substantial. Data quality is crucial for accurate predictions, and issues with data acquisition or data quality can significantly impact the system’s reliability.

Furthermore, the success of predictive maintenance relies heavily on the expertise of the maintenance personnel to interpret the insights generated by the system. Proper training and ongoing support are vital to maximizing the system’s potential.

Demonstrating the Process in the Oil and Gas Industry

The oil and gas industry benefits greatly from predictive maintenance. A typical process involves acquiring data from various sensors on pipelines and pumps. This data is then preprocessed to remove noise and irrelevant information. Feature engineering techniques are used to extract relevant patterns and trends. A machine learning model is trained on this processed data to predict potential equipment failures. Finally, the model’s predictions are used to trigger maintenance schedules and prevent costly downtime.

This process ensures consistent production, minimizes environmental risks, and optimizes the lifespan of equipment. For example, a pipeline operator using vibration and pressure sensors can predict potential cracks in pipelines, allowing for timely repairs and preventing catastrophic leaks.
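The steps above (acquire, preprocess, engineer features, train, trigger maintenance) can be sketched end to end in a few lines. Everything here is an assumption chosen for illustration: the pressure data is synthetic, the "model" is a simple nearest-centroid classifier standing in for whatever algorithm an operator would actually use, and the asset tag `P-101` is made up.

```python
import random
import statistics

def smooth(signal, k=5):
    """Preprocessing: moving-median filter that suppresses isolated spikes."""
    half = k // 2
    return [statistics.median(signal[max(0, i - half): i + half + 1])
            for i in range(len(signal))]

def features(signal):
    """Feature engineering: (mean, standard deviation, end-minus-start drift)."""
    return (statistics.fmean(signal), statistics.pstdev(signal),
            signal[-1] - signal[0])

def train_centroids(labelled):
    """Training: store the average feature vector for each label."""
    centroids = {}
    for label in sorted({lab for _, lab in labelled}):
        rows = [feat for feat, lab in labelled if lab == label]
        centroids[label] = tuple(statistics.fmean(col) for col in zip(*rows))
    return centroids

def predict(centroids, feat):
    """Prediction: return the label whose centroid is closest to feat."""
    return min(centroids, key=lambda lab: sum(
        (a - b) ** 2 for a, b in zip(feat, centroids[lab])))

def make_window(rng, failing, n=60):
    """Acquisition stand-in: synthetic pump pressure in bar, steady when
    healthy and sagging by about 6 bar over the window when failing."""
    drop = 6.0 if failing else 0.0
    return [50.0 - drop * i / (n - 1) + rng.gauss(0, 0.5) for i in range(n)]

rng = random.Random(0)
training = [(features(smooth(make_window(rng, failing))),
             "failing" if failing else "healthy")
            for failing in (False, True) for _ in range(20)]
model = train_centroids(training)

new_window = make_window(rng, failing=True)
status = predict(model, features(smooth(new_window)))
if status == "failing":
    print("Schedule inspection for pump P-101")  # hypothetical asset tag
```

The structure, not the classifier, is the point of the sketch: each stage is a separate function, so a better filter, richer features, or a stronger model can be swapped in without touching the rest.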

Challenges and Limitations

Predictive failure detection offers significant potential for optimizing maintenance strategies, but it also faces real challenges and limitations. These stem from the complexity of real-world systems, the variability of data acquisition, and the limits of the predictive models themselves. Understanding these challenges is crucial for developing robust and effective predictive maintenance systems.

Data Quality Issues

Data quality is a cornerstone of successful predictive models. Inaccurate, incomplete, or inconsistent data can significantly compromise model accuracy and reliability. Errors in sensor readings, data transmission issues, and lack of comprehensive data coverage are common challenges. For example, a sensor malfunctioning intermittently could introduce misleading trends, leading to inaccurate failure predictions. Furthermore, the absence of data for specific operating conditions can hinder the model’s ability to generalize and predict failures under diverse scenarios.
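Checks for the problems named above can be automated before any data reaches a model. The sketch below counts missing samples, out-of-range values, and the longest run of identical readings, which may point to a stuck sensor. The function name, the plausible range, and the run-length limit are illustrative assumptions, not fixed rules.

```python
def quality_report(readings, low, high, max_flat=5):
    """Summarize basic quality problems in one sensor series.

    readings: list of floats, with None marking a missing sample.
    low/high: plausible physical range for this sensor (assumed known).
    max_flat: run length of identical values treated as suspicious.
    """
    missing = sum(1 for r in readings if r is None)
    out_of_range = sum(1 for r in readings
                       if r is not None and not (low <= r <= high))

    longest_run, run, prev = 0, 0, object()  # sentinel never equals a reading
    for r in readings:
        if r is not None and r == prev:
            run += 1
        else:
            run = 1 if r is not None else 0
        longest_run = max(longest_run, run)
        prev = r

    return {
        "missing": missing,
        "out_of_range": out_of_range,
        "longest_flat_run": longest_run,
        "stuck_suspected": longest_run >= max_flat,
    }

# Made-up temperature readings in degrees Celsius.
temps = [20.1, 20.3, None, 20.2, 250.0, 20.4, 20.4, 20.4, 20.4, 20.4, 20.4, 20.5]
report = quality_report(temps, low=0.0, high=100.0)
print(report)
```

A report like this does not repair the data, but it makes it possible to exclude or flag suspect windows so that an intermittent sensor fault is not learned as a genuine trend.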

Model Accuracy and Generalization

Predictive models, regardless of their sophistication, are not infallible. Their ability to accurately predict future failures depends on the quality and representativeness of the training data. Models trained on limited or biased datasets may not generalize well to new, unseen operational conditions. This can result in false positives or false negatives, leading to either unnecessary maintenance actions or undetected failures. A common example is a model trained on data from a specific machine type operating under ideal conditions. When deployed on a machine operating under different load profiles, the model may produce inaccurate predictions.

Broader, more representative data helps. In the automotive industry, for example, companies can use data from test drive campaigns to identify potential mechanical issues early on. This data, combined with advanced analytics, can significantly improve the accuracy of predictive failure detection models.
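One inexpensive safeguard against the load-profile mismatch described above is to compare the operating conditions seen in deployment with those in the training data before trusting a prediction. The sketch below uses a deliberately simple score, the difference in means measured in training standard deviations. The function name, the load figures, and the limit of 2.0 are assumptions for illustration only.

```python
import statistics

def shift_score(train_values, live_values):
    """Absolute difference of means, in units of the training std deviation."""
    mean = statistics.fmean(train_values)
    spread = statistics.pstdev(train_values)
    if spread == 0:
        raise ValueError("training values are constant; score is undefined")
    return abs(statistics.fmean(live_values) - mean) / spread

# Percent of rated load (made-up numbers).
train_load = [48, 50, 52, 49, 51, 50, 47, 53]   # conditions seen in training
similar_load = [49, 51, 50, 52, 48]             # deployment close to training
heavy_load = [78, 82, 80, 85, 79]               # deployment far from training

SHIFT_LIMIT = 2.0  # hypothetical cut-off; tune per application
for name, live in (("similar", similar_load), ("heavy", heavy_load)):
    score = shift_score(train_load, live)
    verdict = "outside training range" if score > SHIFT_LIMIT else "ok"
    print(f"{name}: shift score {score:.1f} -> {verdict}")
```

A high score does not say the model is wrong, only that it is being asked about conditions it has not seen, which is a reasonable cue to collect more data or retrain.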

Model Complexity and Interpretability

Advanced machine learning models, while potentially powerful, can be complex and opaque. Understanding the reasoning behind a model’s predictions can be challenging, particularly for complex models like deep neural networks. This lack of interpretability can hinder the identification of critical factors contributing to failure and the implementation of effective countermeasures. Moreover, the complexity of some models can lead to increased computational demands, making real-time deployment challenging.

Real-time Deployment and Scalability

Deploying predictive models in real-time presents significant challenges, particularly for large-scale industrial systems. The volume and velocity of data generated by such systems can overwhelm existing infrastructure, potentially delaying or preventing timely failure predictions. The scalability of the chosen model and infrastructure must be considered to handle the increasing data load associated with the system’s growth.
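One way to keep per-sensor cost predictable as data volume grows is to process readings as a stream with a fixed-size window rather than storing full histories. The sketch below is a minimal rolling z-score detector. The class name, window size, warm-up length, and limit are assumptions for this example, and a production system would also need buffering, persistence, and alert routing.

```python
from collections import deque
import statistics

class RollingDetector:
    """Flags readings that sit far outside a fixed-size window of recent values."""

    def __init__(self, window=50, z_limit=4.0, warmup=10):
        self.recent = deque(maxlen=window)  # bounded memory per sensor
        self.z_limit = z_limit
        self.warmup = warmup

    def update(self, value):
        """Return True if value is anomalous relative to the recent window."""
        anomalous = False
        if len(self.recent) >= self.warmup:
            mean = statistics.fmean(self.recent)
            spread = statistics.pstdev(self.recent)
            anomalous = spread > 0 and abs(value - mean) > self.z_limit * spread
        if not anomalous:
            # Outliers are not stored, so they cannot distort the baseline.
            self.recent.append(value)
        return anomalous

# Synthetic stream: a small repeating pattern around 10 with one injected spike.
stream = [10 + 0.1 * ((i % 5) - 2) for i in range(60)]
stream[40] = 15.0

detector = RollingDetector()
flagged = [i for i, value in enumerate(stream) if detector.update(value)]
print("Anomalous sample indices:", flagged)
```

Because each detector holds at most `window` values, memory use grows with the number of sensors rather than with the length of their history.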

Table of Potential Limitations of Predictive Methods

| Predictive Method | Potential Limitations |
|---|---|
| Regression Models (e.g., linear regression) | Limited ability to capture complex relationships, susceptible to outliers, may not perform well with non-linear data. |
| Support Vector Machines (SVMs) | Computational intensity, can be difficult to interpret the model’s decision process. |
| Decision Trees | Prone to overfitting, may not generalize well to new data, sensitivity to noisy data. |
| Neural Networks | Black box nature, requires significant computational resources, data dependency, susceptible to overfitting. |

Future Research Directions

Future research in predictive failure detection should focus on developing more robust and interpretable models, enhancing data acquisition techniques, and addressing the scalability issues associated with real-time deployment. This includes exploring hybrid approaches that combine different predictive methods to leverage their respective strengths and investigating the use of explainable AI (XAI) techniques to improve model interpretability. Further research into the development of novel sensor technologies that provide more comprehensive and accurate data is also essential.

Future Trends and Developments

Predictive failure detection is rapidly evolving, driven by advancements in sensor technology, data analytics, and artificial intelligence. This evolution promises to significantly enhance maintenance strategies and reduce operational costs across various industries. The future of this field hinges on seamlessly integrating these advancements to provide more accurate and proactive failure prediction.

Emerging Trends in Predictive Failure Detection

The field is moving beyond basic component monitoring towards holistic system-level predictions. This involves considering the interplay of multiple components and their impact on overall system performance. This shift allows for more sophisticated analyses, enabling predictions about potential cascading failures and proactive maintenance strategies that address these interconnected issues. Real-time monitoring and analysis are becoming increasingly crucial, allowing for rapid responses to emerging problems.

Advancements in Sensor Technology

Sensors are becoming smaller, more sophisticated, and more capable of collecting detailed data. This trend leads to improved data quality and more comprehensive insights into equipment performance. One example is the growing use of wireless sensors, which enable easier deployment and reduced maintenance needs. They are increasingly deployed in remote or hazardous locations, enabling real-time monitoring without the need for extensive infrastructure. Another is the integration of sensors with advanced data processing capabilities, which allows for more accurate measurements and reduces the need for manual data collection.

Advancements in Data Analytics

Data analytics techniques are becoming more sophisticated, enabling more complex models and predictions. Machine learning algorithms, particularly deep learning, are being utilized to extract intricate patterns from large datasets. For instance, large datasets from numerous machines can be analyzed to identify anomalies and trends that might otherwise go unnoticed. This trend promises to improve the accuracy of failure predictions and enable more precise estimations of remaining useful life. The availability of powerful computing resources and specialized software packages for data analytics further enhances the potential of predictive maintenance.
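To make the idea of remaining useful life (RUL) concrete, the sketch below fits a straight line to a wear indicator and extrapolates to a failure level. The linear degradation assumption, the function name, and all numbers are illustrative. Real degradation is often non-linear, which is one reason more sophisticated models are being explored.

```python
def estimate_rul(times, health, failure_level):
    """Estimate remaining useful life by linear extrapolation.

    Fits health = a + b * t by ordinary least squares and returns the time
    left, after the last observation, until the fitted line reaches
    failure_level. Assumes the indicator rises with wear; returns None when
    the fitted trend is flat or falling.
    """
    n = len(times)
    t_mean = sum(times) / n
    h_mean = sum(health) / n
    slope = (sum((t - t_mean) * (h - h_mean) for t, h in zip(times, health))
             / sum((t - t_mean) ** 2 for t in times))
    intercept = h_mean - slope * t_mean
    if slope <= 0:
        return None
    time_of_failure = (failure_level - intercept) / slope
    return max(0.0, time_of_failure - times[-1])

# Made-up bearing wear indicator sampled every 100 operating hours.
hours = [0, 100, 200, 300, 400]
wear = [1.0, 1.5, 2.0, 2.5, 3.0]

rul = estimate_rul(hours, wear, failure_level=5.0)
print(f"Estimated remaining useful life: {rul:.0f} hours")
```

An estimate like this is only as good as the assumed trend, so in practice it would be refreshed as new readings arrive and reported with some measure of uncertainty.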

New Applications for Predictive Failure Detection

Predictive failure detection is expanding beyond traditional industrial settings. This includes applications in healthcare, transportation, and infrastructure management. In healthcare, predictive maintenance can optimize equipment performance and reduce equipment downtime, thereby improving the quality and efficiency of patient care. In transportation, it can ensure the safety and reliability of vehicles, bridges, and other infrastructure components. The potential applications are extensive, demonstrating the broad reach and applicability of this technology.

Role of AI and Machine Learning

AI and machine learning play a critical role in developing more accurate and sophisticated predictive models. These models can learn from vast amounts of data, identify complex patterns, and predict failures with greater precision. Deep learning models, in particular, are proving highly effective in handling complex datasets and extracting intricate relationships that traditional methods might miss. These models are adept at handling the massive and diverse data streams produced by modern sensor networks.

Potential of Integrating Predictive Failure Detection with Other Technologies

Integration with other technologies, such as the Internet of Things (IoT) and cloud computing, significantly expands the potential of predictive failure detection. The IoT facilitates the collection of data from numerous interconnected devices, while cloud computing provides the necessary processing power and storage for handling large datasets. This integration allows for real-time monitoring and analysis of vast quantities of data, further enhancing the accuracy and speed of predictive maintenance strategies. This synergy is expected to improve the overall efficiency and reliability of industrial processes.

Last Word

In conclusion, predictive failure detection offers a significant advantage in optimizing maintenance strategies and improving equipment reliability. By understanding the entire process, from data acquisition to maintenance optimization, businesses can make informed decisions, minimize downtime, and enhance operational efficiency. The future of predictive maintenance looks bright, promising further advancements and widespread adoption across diverse industries.

Essential FAQs

What are some common challenges in data acquisition for predictive failure detection?

Data quality issues, inconsistent data formats, and a lack of historical data are common challenges. Ensuring data accuracy and completeness is crucial for building reliable predictive models.

What types of machine learning algorithms are commonly used in predictive failure detection?

Commonly used machine learning algorithms include Support Vector Machines (SVMs), Random Forests, and Neural Networks, among others. The choice of algorithm depends on the specific application and the nature of the data.

How does predictive maintenance impact equipment lifespan?

Predictive maintenance allows repairs to be scheduled before a fault escalates, which can extend equipment lifespan and reduce unplanned downtime. This results in improved reliability and reduced maintenance costs.

What are some key metrics used to evaluate the success of a predictive maintenance system?

Key metrics include prediction accuracy, false positive rate, and the return on investment (ROI). These metrics help evaluate the effectiveness and cost-efficiency of the system.
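The three metrics named above can be computed directly from a log of predictions and outcomes. The sketch below is a minimal version. The function names, the sample predictions, and the cost figures are all made up for illustration.

```python
def evaluate(predicted, actual):
    """Return (accuracy, false_positive_rate) for boolean failure predictions."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # failure predicted, occurred
    tn = sum(1 for p, a in pairs if not p and not a)  # no alarm, no failure
    fp = sum(1 for p, a in pairs if p and not a)      # false alarm
    accuracy = (tp + tn) / len(pairs)
    false_positive_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return accuracy, false_positive_rate

def roi(savings, cost):
    """Return on investment as a fraction: (savings - cost) / cost."""
    return (savings - cost) / cost

# Hypothetical log: one entry per inspected asset.
predicted = [True, True, False, False, True, False, False, False]
actual    = [True, False, False, False, True, True, False, False]

accuracy, fpr = evaluate(predicted, actual)
program_roi = roi(savings=150_000, cost=100_000)  # made-up annual figures
print(f"accuracy={accuracy:.2f}, false positive rate={fpr:.2f}, ROI={program_roi:.0%}")
```

Accuracy alone can mislead when failures are rare, since a system that never raises an alarm would still score well, which is why it is paired with the false positive rate and a cost-based measure here.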